Nintex Forms provides an intuitive interface that lets designers quickly create form-enabled solutions.

However, some scenarios require us to go beyond the out-of-the-box capabilities. For example, we recently had a requirement involving two lists and the need to read in rate information from a second list. The complexity was that if a specific date field changes in one list, the form would have to refresh the rate information dynamically without closing the form.

The purpose of this blog post is to provide one post that contains the information you need to effectively enhance your Nintex Forms using JavaScript.

How To Enhance Nintex Forms With JavaScript

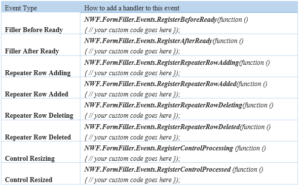

A Nintex form is bound to both a SharePoint list and an associated content type within that list, so reading from a second list requires JavaScript. Form designers with JavaScript knowledge can leverage the events shown below and rely on their code running as expected when these events occur at runtime. The following table summarizes the available events.

Embedding Custom JavaScript Within a Nintex Form

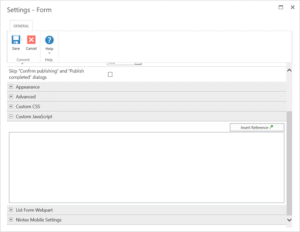

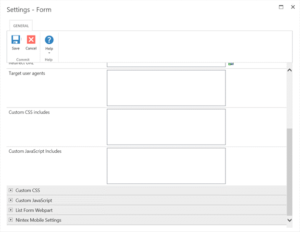

The simplest way to include JavaScript in a Nintex form is to embed it within the form itself. Within Form Settings, you will find a Custom JavaScript header under which you can add your script. This approach is perfectly acceptable for a single form, but consider using custom JavaScript includes if the script is to be reused.

CUSTOM JAVASCRIPT INCLUDES

This setting is found in Form Settings within the Nintex Forms Designer and is used to include custom JavaScript files at runtime. An include can be a local file within SharePoint, such as ‘/SiteAssets/example.js’, or an external file referenced by its URL.
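As an illustration, a shared include file might hold a helper for the rate lookup described in the introduction. The sketch below is not taken from the original project: the list title `Rates`, the fields `Rate` and `EffectiveDate`, and the function names `buildRateQueryUrl` and `loadRate` are assumptions. It also assumes the SharePoint REST API is reachable from the form page, and that the `_spPageContextInfo` global and `NWF$` (Nintex's jQuery alias) are available there.

```javascript
// Hypothetical contents of a shared include such as /SiteAssets/example.js.
// 'Rates', 'Rate' and 'EffectiveDate' are assumed names, not from the original form.

// Builds a SharePoint REST URL that returns the most recent rate
// whose EffectiveDate is on or before the given date.
function buildRateQueryUrl(webUrl, listTitle, asOfDate) {
  // Single quotes inside an OData string literal are escaped by doubling them.
  var safeTitle = encodeURIComponent(listTitle.replace(/'/g, "''"));
  var filter = "EffectiveDate le datetime'" + asOfDate.toISOString() + "'";
  return webUrl.replace(/\/+$/, '') +
    "/_api/web/lists/getbytitle('" + safeTitle + "')/items" +
    '?$select=Rate,EffectiveDate' +
    '&$filter=' + encodeURIComponent(filter) +
    '&$orderby=EffectiveDate%20desc&$top=1';
}

// Requests the rate and passes it (or null when no row matches) to onRate.
// Only call this on a form page, where NWF$ and _spPageContextInfo exist.
function loadRate(asOfDate, onRate) {
  NWF$.ajax({
    url: buildRateQueryUrl(_spPageContextInfo.webAbsoluteUrl, 'Rates', asOfDate),
    headers: { accept: 'application/json;odata=verbose' },
    success: function (data) {
      var rows = data.d.results;
      onRate(rows.length ? rows[0].Rate : null);
    }
  });
}
```

A form script could then call `loadRate` with the value of the date field each time that field changes, and write the returned rate into the form without closing it.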

BUTTON ACTIONS

With Nintex Forms Designer, you can drop a Button control onto the form. By right-clicking the Button control and selecting Settings, you can set the Button action to JavaScript. Then, under the Advanced header of the control settings, provide the JavaScript to execute when a user clicks the button in the Client click setting.

STORING CLIENT ID IN JAVASCRIPT VARIABLES

This option creates a JavaScript variable that holds the client ID of the form control, which allows data to pass between the Nintex form and your JavaScript. The settings are found under the control settings: set Store Client ID in JavaScript variable to Yes, and enter a variable name in Client ID JavaScript variable name.
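A minimal sketch of how such a variable is typically used, assuming a control whose Client ID JavaScript variable name was set to `rateDate` (a hypothetical name). The variable holds the element's ID, so prefixing it with `#` gives a selector for `NWF$`, Nintex's jQuery alias. The helper names are illustrative only.

```javascript
// Reads the current value of a control, given a jQuery-like function and the
// client ID that Nintex stored in the JavaScript variable.
function getControlValue($, clientId) {
  return $('#' + clientId).val();
}

// Writes a value to the control and returns that same value.
function setControlValue($, clientId, value) {
  $('#' + clientId).val(value);
  return value;
}

// On the form page, 'rateDate' (hypothetical) would be the variable created by
// the "Client ID JavaScript variable name" setting. The guard keeps this
// snippet harmless when it is loaded outside a Nintex form.
if (typeof NWF$ !== 'undefined' && typeof rateDate !== 'undefined') {
  NWF$(document).ready(function () {
    NWF$('#' + rateDate).change(function () {
      console.log('Date changed to: ' + getControlValue(NWF$, rateDate));
    });
  });
}
```

Passing the jQuery-like function in as a parameter keeps the helpers easy to exercise outside SharePoint, for example with a small stub in place of `NWF$`.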

FORM CONTROLS AND CUSTOM VALIDATION FUNCTIONS

Nintex Forms allows for custom validation of form controls based on JavaScript. Within the form control settings under the Validation header, set Use Custom Validation to Yes and provide the relevant JavaScript within the Custom Validation Function setting.
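For illustration, a custom validation function might look like the sketch below. It assumes the function receives two parameters in the style of ASP.NET client-side validators, where the second exposes the control's `Value` and an `IsValid` flag to set. The function name `validatePositiveRate` and the rule itself are hypothetical. The function would be defined in the form's Custom JavaScript, and its name entered in the Custom Validation Function setting.

```javascript
// Hypothetical rule: the control must contain a number greater than zero.
// 'source' is the validator element; 'args' carries the value being validated
// and the IsValid flag that reports the result.
function validatePositiveRate(source, args) {
  var value = parseFloat(args.Value);
  args.IsValid = !isNaN(value) && value > 0;
}
```

The second parameter is named `args` rather than `arguments` so that the function also parses in strict mode, where `arguments` cannot be used as a parameter name.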

FILLER AFTER READY

By default, a Nintex form focuses on the first enabled input control on the form. However, you can switch the focus to a specific control using JavaScript, as the examples below demonstrate:

Set initial mouse cursor focus in the Single Line Text Control

1. Open the Nintex Forms designer and drag a Single Line Text control onto the canvas.

2. Click on the control and locate the CSS class field in the Ribbon. Type ‘singlelineFocus’.

3. Click on the Nintex Forms tab, and click on the Settings button in the Ribbon.

4. Expand the Custom JavaScript section, and enter the following code:

    NWF.FormFiller.Events.RegisterAfterReady(function () {
        NWF$('.singlelineFocus input.nf-associated-control').focus();
    });

Click Save and then publish the form. When you add a new item, you should notice that the initial focus has changed and is now in the Single Line Text control.

Set initial mouse cursor focus in the Date/Time Control

1. Open the Nintex Forms designer and drag a Date/Time control onto the canvas.

2. Click on the control and locate the CSS class field in the Ribbon. Type ‘datetimeFocus’.

3. Click on the Nintex Forms tab, and click on the Settings button in the Ribbon.

4. Expand the Custom JavaScript section, and enter the following code:

    NWF.FormFiller.Events.RegisterAfterReady(function () {
        NWF$('.datetimeFocus input.nf-date-picker').focus();
    });

Click Save and then publish the form. When you add a new item, you should notice that the initial focus has changed and is now in the Date/Time control.

Set initial mouse cursor focus in the People Control

1. Open the Nintex Forms Designer and drag a People control onto the canvas.

2. Click on the control and locate the CSS class field in the Ribbon. Type ‘peopleFocus’.

3. Click on the Nintex Forms tab, and click on the Settings button in the Ribbon.

4. Expand the Custom JavaScript section, and enter the following code:

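Repeater Row Adding

You can trigger this event when you want to inform the user that they are about to add a new row to the repeating section.

1. Open the designer and drag a Repeating Section control onto the canvas.

2. Click on the control and locate the CSS class field in the Ribbon. Type ‘expensesRepeater’.

3. Click on the Nintex Forms tab, and click on the Settings button in the Ribbon.

4. Expand the Custom JavaScript section, and enter the following code:

    NWF.FormFiller.Events.RegisterRepeaterRowAdding(function () {
        var repeaterControl = NWF$(arguments[0][0]);
        if (repeaterControl.hasClass('expensesRepeater')) {
            alert('you are about to add a new row to the expenses1 repeater');
        }
    });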

Click Save and then publish the form. Add a new item, and click on Add New Row to add another row to the repeating section. A message should appear at the top of the form informing you that you are about to add a new entry. When you click OK on the message, the new row will be added.

Repeater Row Added

You can trigger this event when you want to inform the user that a new row has been added to the repeating section.

1. Open the designer and drag a Repeating Section control onto the canvas.

2. Click on the control and locate the CSS class field in the Ribbon. Type ‘expensesRepeater’.

3. Click on the Nintex Forms tab, and click on the Settings button in the Ribbon.

4. Expand the Custom JavaScript section, and enter the following code:

    NWF.FormFiller.Events.RegisterRepeaterRowAdded(function () {
        var repeaterRow = NWF$(arguments[0][0]);
        if (NWF$(repeaterRow.parents('.nf-repeater')[0]).hasClass('expensesRepeater')) {
            alert('new row has been added to the expenses1 repeater');
        }
    });

Click Save and then publish the form. Add a new item, and click on Add New Row to add another row to the repeating section. A new row will be added to the repeating section and then a message box will appear.

Repeater Row Deleting

You can trigger this event when you want to inform the user that they are deleting a row from the repeating section.

1. Open the designer and drag a Repeating Section control onto the canvas.

2. Click on the control and locate the CSS class field in the Ribbon. Type ‘expensesRepeater’.

3. Click on the Nintex Forms tab, and click on the Settings button in the Ribbon.

4. Expand the Custom JavaScript section, and enter the following code:

    NWF.FormFiller.Events.RegisterRepeaterRowDeleting(function () {
        var repeaterRow = NWF$(arguments[0][0]);
        if (NWF$(repeaterRow.parents('.nf-repeater')[0]).hasClass('expensesRepeater')) {
            alert('you are about to delete a row from the expenses1 repeater');
        }
    });

Click Save and then publish the form. Add a new item, and click on Add New Row to add another row to the repeating section. Next, click on the delete icon to delete a row. A message will tell you that the row is about to be deleted. When you click OK, the row will be deleted.

Repeater Row Deleted

You can trigger this event when you want to inform the user that a row from the repeating section has been deleted.

1. Open the designer and drag a Repeating Section control onto the canvas.

2. Click on the control and locate the CSS class field in the Ribbon. Type ‘expensesRepeater’.

3. Click on the Nintex Forms tab, and click on the Settings button in the Ribbon.

4. Expand the Custom JavaScript section, and enter the following code:

    NWF.FormFiller.Events.RegisterRepeaterRowDeleted(function () {
        var repeaterControl = NWF$(arguments[0][0]);
        if (repeaterControl.hasClass('expensesRepeater')) {
            alert('you have just deleted a row from the expenses1 repeater');
        }
    });
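The repeater handlers shown in this post differ in what they receive: the Row Adding and Row Deleted code treats the first argument as the repeater control, while the Row Added and Row Deleting code treats it as the row. A small helper can hide that difference. This is only a sketch built on the selectors used above; `belongsToRepeater` and `registerExpenseHandlers` are hypothetical names.

```javascript
// Returns true when 'element' is the repeater carrying 'cssClass', or a row
// inside that repeater. '$' is a jQuery-like function such as NWF$.
function belongsToRepeater($, element, cssClass) {
  var $el = $(element);
  if ($el.hasClass(cssClass)) {
    return true; // the element is the repeater control itself
  }
  // Otherwise treat it as a row and check the nearest enclosing repeater.
  return $el.parents('.nf-repeater').first().hasClass(cssClass);
}

// Registers one handler per repeater event, using the registration
// functions shown in the examples above.
function registerExpenseHandlers(events, $, notify) {
  var names = ['RowAdding', 'RowAdded', 'RowDeleting', 'RowDeleted'];
  names.forEach(function (name) {
    events['RegisterRepeater' + name](function () {
      if (belongsToRepeater($, arguments[0][0], 'expensesRepeater')) {
        notify(name);
      }
    });
  });
}

// Only runs on a Nintex form page, where NWF and NWF$ are defined.
if (typeof NWF !== 'undefined' && typeof NWF$ !== 'undefined') {
  registerExpenseHandlers(NWF.FormFiller.Events, NWF$, function (name) {
    console.log('expenses repeater event: ' + name);
  });
}
```

Logging to the console instead of calling `alert` keeps the form usable while you confirm which events fire, and the `notify` callback can be swapped for an alert once the behavior is as expected.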

SharePoint CSOM Scripting in PowerShell

I was recently faced with a migration issue that left the documents in a document library mapped improperly to a lookup field in a SharePoint list.

The problem?

The migration tool did not preserve the original document IDs when it migrated the documents to a new document library. Since the tool had no easy way to preserve the IDs, I was left with the task of remapping them and fixing the lookup values post-migration.

I had my migration completely scripted with PowerShell and wanted to use PowerShell for the remapping as well.

I needed a way to access internal servers easily and wasn’t too concerned about performance – it’s a one-time script. Enter CSOM. I decided to write two PowerShell scripts: one that maps the original ID of each list item to the document name, and one that reads the list of documents, finds the new ID of each document, and associates it with the lookup column.

This works because the migration tool is able to maintain list item IDs, i.e. source list item ID 10 will still be item ID 10 in the destination list – it just can’t do it for document libraries!

Let’s walk through creating the first script, “MapItemIdToDocumentTitle.ps1”.

Step 1: Add command line parameters to the script

Fortunately, PowerShell makes this so easy:

    param (
        [string]$url = "https://sourcesiteurl",
        [string]$listName = "Source List Name",
        [string]$associatedListName = "Associated Document Library Name",
        [string]$associatedDocsFieldName = "Associated_x0020_Docs",
        [string]$outfile = "out.csv"
    )

Just make sure these are the first lines in your PowerShell script.

Step 2: Add the CSOM references to your script

    # the path here may need to change if you used e.g. C:\Lib..
    Add-Type -Path "c:\Program Files\Common Files\microsoft shared\Web Server Extensions\15\ISAPI\Microsoft.SharePoint.Client.dll"
    Add-Type -Path "c:\Program Files\Common Files\microsoft shared\Web Server Extensions\15\ISAPI\Microsoft.SharePoint.Client.Runtime.dll"

    # note that you might need some other references (depending on what your script does) for example:
    Add-Type -Path "c:\Program Files\Common Files\microsoft shared\Web Server Extensions\15\ISAPI\Microsoft.SharePoint.Client.Taxonomy.dll"

Step 3: Create the client context and load the web

    # connect/authenticate to SharePoint Online and get ClientContext object..
    $clientContext = New-Object Microsoft.SharePoint.Client.ClientContext($url)
    if (!$clientContext.ServerObjectIsNull.Value)
    {
        Write-Host "Connected to SharePoint site: '$url'" -ForegroundColor Green
    }

    $rootweb = $clientContext.Web
    $childWebs = $rootweb.Webs
    $clientContext.Load($rootweb)
    $clientContext.Load($childWebs)
    $clientContext.ExecuteQuery()

Note: Nothing is actually executed on the server until the “ExecuteQuery” method is called. This is the key to working with the CSOM – do stuff locally, then execute on the server. You will see this pattern throughout the code.

Step 4: Process the web (create the CSV file)

    processWeb($clientContext.Web)

Here, processWeb is the function that does all of the work:

    function processWeb($web)
    {

Grab the lists – remember, nothing happens on the server until ExecuteQuery is called:

        $lists = $web.Lists
        $clientContext.Load($web)
        $clientContext.Load($lists)
        $clientContext.ExecuteQuery()

To output CSV easily, all output is stored in an array:

        Write-Host "Processing web: " $web.Title
        $output = @()

Get all items from the source list (note: this works because the source list has fewer than 10,000 items). Again, notice the ExecuteQuery line – data is not retrieved from the server until this line executes.

        $theList = $lists.GetByTitle($listName)
        $docList = $lists.GetByTitle($associatedListName)
        $query = [Microsoft.SharePoint.Client.CamlQuery]::CreateAllItemsQuery(9999)
        $items = $theList.GetItems($query)
        $clientContext.Load($items)
        $clientContext.ExecuteQuery()

Now we just loop through each item finding items that have associated docs. I needed to go back to the server to load the actual document item and then again to get the actual file (so I could grab the file name).

        foreach ($item in $items)
        {
            $associatedDocs = $item[$associatedDocsFieldName]
            if ($associatedDocs)
            {
                foreach ($associatedDoc in $associatedDocs)
                {
                    # get the actual file name from the associated list
                    $docItem = $docList.GetItemById($associatedDoc.LookupId)
                    $clientContext.Load($docItem)
                    $clientContext.ExecuteQuery()

                    $docFile = $docItem.File
                    $clientContext.Load($docFile)
                    $clientContext.ExecuteQuery()

                    $docName = $docFile.Name

Writing to CSV is picky!

You can output as many columns as you want, just use the “add-member” call with the NoteProperty membertype and name it appropriately. Append each row to the output.

                    $row = New-Object PSObject
                    $row | Add-Member -MemberType NoteProperty -Name "ID" -Value $item.ID
                    $row | Add-Member -MemberType NoteProperty -Name "FileID" -Value $associatedDoc.LookupId
                    $row | Add-Member -MemberType NoteProperty -Name "FileName" -Value $docName
                    $output += $row
                }
            }
        }

Once we’ve completed the process, simply use the “export-csv” call and PowerShell does all the magic of writing the header and comma-separated values. It’s really that easy!

        $output | Export-Csv -NoTypeInformation -Path $outfile
        Write-Host "Output saved to $outfile"
    }

That’s it.

The second script I wrote is very similar, but reads the CSV file as input and remaps the documents. While writing these scripts, simply refer to MSDN for the CSOM reference: https://msdn.microsoft.com/en-us/library/office/dn268594.aspx

Hopefully, this post will help you get started with your CSOM PowerShell scripting. I love using PowerShell for this type of stuff – it’s super easy to write, easy to run and all it requires is a text editor.